OpenAI

- 샘 알트만이 2015년 12월 11일 설립

- 인공지능이 인류에 재앙이 되지 않고, 인류에게 이익을 주는 것을 목표로 함

- Microsoft가 투자를 받고, 독점 라이선스 제공

- 비영리 기업으로 시작했으나 현재는 한계 영리기업 형태

ChatGPT

- 초거대 언어모델 GPT-3.5 기반 대화형 인공지능 챗봇

- 채팅을 통해 질문을 입력하면 사람처럼 답을 해주는 서비스

- 아주 많은 파라미터를 가진 Transformer 기반 언어모델을 아주 많은 데이터로 학습

Language Model 언어 모델

- 언어 모델은 단어에 확률을 부여해서 문장이 얼마나 자연스러운지를 평가할 수 있는 모델

- 이전의 문맥을 바탕으로 다음 또는 특정 위치에 적합한 단어를 확률적으로 예측

- 언어 모델의 평가 지표는 텍스트의 PPL(Perplexity)을 사용

Ngram > RNN(1986) > LSTM(1997) > Transformer(2017)

N-gram

- 텍스트에서 n개의 단어 시퀀스의 확률만이 고려되는 매우 간단한 언어모델

- 코퍼스에 나오지 않은 단어 시퀀스의 확률을 정확하게 추정하지 못함

- 이전 n-1개의 문맥만 고려하므로 긴 시퀀스에 적합하지 않음

RNN

- Recurrent의 개념을 적용한 Neural Network 기반 언어모델

- RNN, LSTM, GRU 등의 네트워크를 사용

- N-gram 보다 긴 문맥을 기억할 수 있고, Unseen 확률도 더욱 정확

Transformer

- Attension Is All you Need, 2017 논문에서 제안한 방법

- 높은 Scalability 로 대형 언어모델에서도 안정적인 학습이 가능

- 범용성도 높아서 텍스트 외에도 음성, 영상에서도 사용되고 있음

Search

- Greedy Search : 가장 확률이 높은 단어의 경로만 생존

- Beam Search

Sampling

- Top-K Sampling: 높은 확률의 K개 단어 중에서 샘플링

- Top-p Sampling: 확률의 누적합이 p 이상 되도록 하는 단어 샘플링

GPTS

InstructGPT

- 등장 배경 : GPT3 결과는 도움이 안되고, 사실이 아닌 결과를 출력 + 해로운 결과를 제공

Step 1: Supervised fine-tuning (SFT)

- 프롬프트 선택: 데이터셋에서 다양하고 적합한 프롬프트 선택

- 작업자의 답변 작성: 서비스 정책에 맞는 적합한 답변 작성

- 모델 학습: 구축된 데이터로 GPT-3를 미세 조정

Step 2: Reward Model Training

- 응답 데이터 생성: SFT 모델에서 프롬프트에 대한 여러 개의 답변을 생성

- 작업자의 순위 및 점수 작성: 각 응답에 대한 순위와 점수를 작성

- 보상 모델 학습: 응답의 보상을 예측하는 모델 학습

Step 3: Policy Training (LM Training)

- 학습용 프롬프트 데이터 선정

- 언어 모델 업데이트 및 답변 생성: 손실 함수에 따라 모델을 업데이트, 새로운 모델로 답변 데이터 생성

비감독 사전학습 (Un-supervised Pre-training)

- Wav2Vec 같은 비감독 사전학습 기술이 주목받고 있음

- 사람이 만든 라벨이 필요 없으므로 수백만 시간까지 데이터가 사용 가능

- 비감독 사전 학습 모델을 파인 튜닝해서 특히 적은 데이터에서 SOTA를 달성

Motivation

비감독 사전 학습의 한계

- 음성인식을 위해 파인튜닝 필요

- 머신 러닝이 우수하지만 다른 데이터에서 성능 낮음

- 머신 러닝이 사람이 인지하지 못하는 데이터의 특성을 악용하고 있기 때문

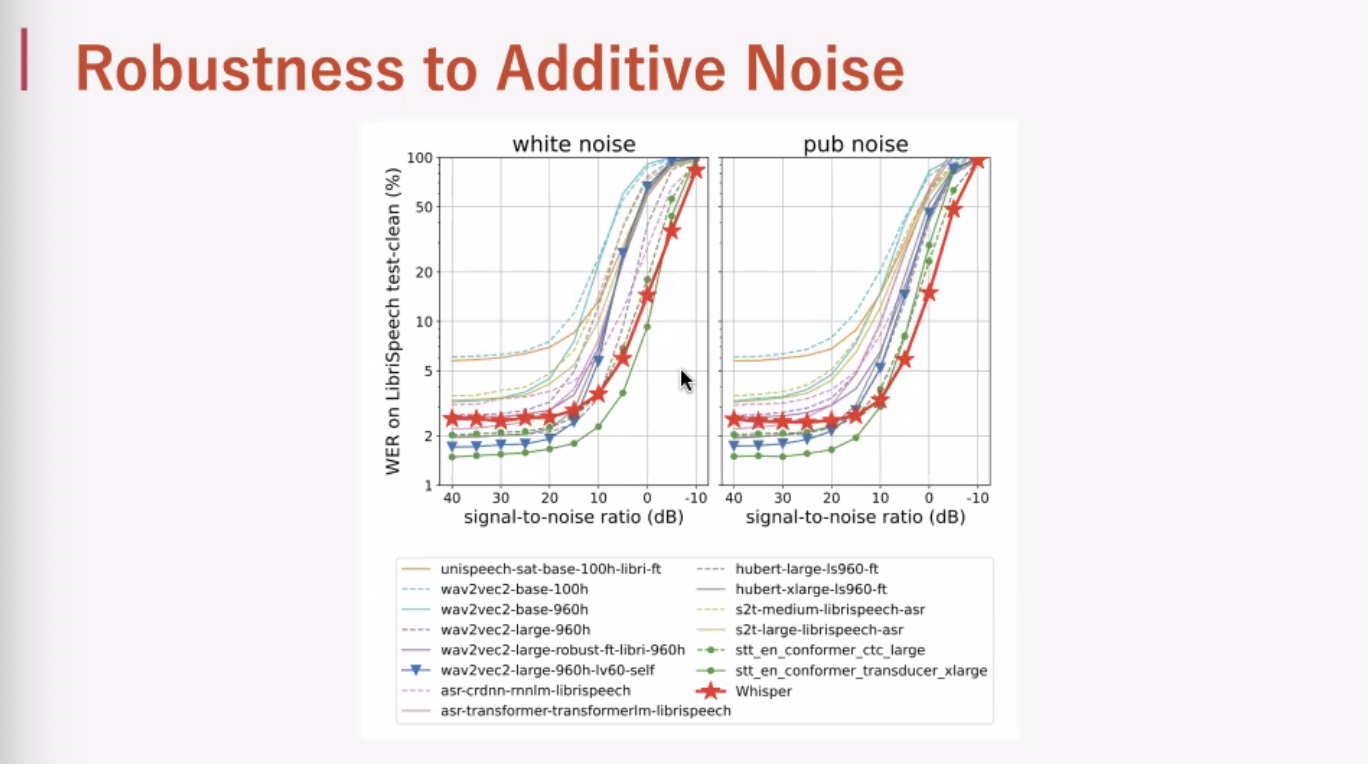

Whisper

- 음성인식의 최종 목적은 모든 데이터에서 파인 튜닝이 없이 안정적으로 동작하는 것

Data Preprocessing

- 인터넷에서 전사가 있는 음성 데이터를 구축했다. : 다양한 화자, 언어, 환경이 포함, 인식기를 강건하게 만들어 줌

- 전사 오류가 많아서 자동 필터링을 개발 : 인식기가 만들어낸 전사를 걸러야 했음

- 언어 검출기 개발 : 음성 언어 검출기(VoxLingua107 사용) 개발, 전사 텍스트로 CLD2에서 나온 언어와 다르면 학습데이터 제외, 전사 언어가 영어면 번역 데이터로 사용

- 음성은 30초 단위로 자르고 전사도 분리 : 잘랐는데 전사가 없는 음성도 VAD 학습 데이터로 사용

Long-form Transcription

- 30초 세그먼트로 연속적인 전사를 수행

- 모델로 추정된 시간 스탬프에 따라서 window shifting을 한다.

- 안정적으로 긴 오디오를 전사하기 위해서 빔서치와 "반복과 모델 예측 로그 확률"에 기반한 temperature scheduling이 중요

Comparison with Human Performance

- 테스트셋에서 남은 개선량을 위해서 전문가의 전사능력과 비교

- Whisper는 사람한테 아주 가까운 성능을 보인다.

Limitations and Future Work

Current limitations of Whisper

- Inaccurate timestamps

- Hallucinations

- Low performance on low-resource languages

- No speaker recognition

- No real-time transcription

- Pure PyTorch inference

Whisper 설치 & 사용 방법

결과